Artificial Intelligence (AI) has entered a new era with the rise of open-source large language models (LLMs). Among these, DeepSeek-R1 stands out as a groundbreaking innovation, challenging proprietary models such as OpenAI’s o1, OpenAI’s GPT-4o, and Anthropic’s Claude 3.5 Sonnet. With its strong reasoning performance, cost efficiency, and open-source accessibility, DeepSeek-R1 is reshaping how businesses, developers, and researchers approach AI. In this blog post, we’ll explore the technical architecture, performance benchmarks, use cases, and ethical implications of DeepSeek-R1, providing a comprehensive guide to leveraging this transformative technology.

1. Introduction: The Rise of DeepSeek-R1

The AI landscape has long been dominated by proprietary models developed by tech giants like OpenAI, Google, and Anthropic. These models, while powerful, come with significant limitations: high costs, restricted access, and limited customization. Enter DeepSeek-R1, an open-source LLM that not only matches the performance of its proprietary counterparts but does so at a fraction of the cost.

Key Milestones

- Performance Parity: DeepSeek-R1 scores 97.3% (pass@1) on the MATH-500 benchmark, on par with OpenAI’s o1 reasoning model.

- Cost Efficiency: The final training run of its base model, DeepSeek-V3, reportedly cost about $5.6 million, compared with the more than $100 million OpenAI reportedly spent on GPT-4.

- Open-Source Accessibility: Released under the MIT License, enabling commercial use and fine-tuning.

DeepSeek-R1 represents a paradigm shift in AI development, democratizing access to cutting-edge technology and empowering startups, researchers, and enterprises to innovate without the constraints of proprietary systems.

2. Technical Architecture & Innovations

At the heart of DeepSeek-R1’s success lies its innovative technical architecture. Let’s break down the key components that make this model a game-changer.

Mixture-of-Experts (MoE) Design

DeepSeek-R1 employs a Mixture-of-Experts (MoE) architecture, inherited from its base model DeepSeek-V3. Of its 671 billion total parameters, only about 37 billion are activated for each token: a learned router sends every token to a small subset of expert sub-networks, whether the task is coding, math, or prose. This keeps the compute cost per token close to that of a much smaller dense model while preserving the capacity of a large one.

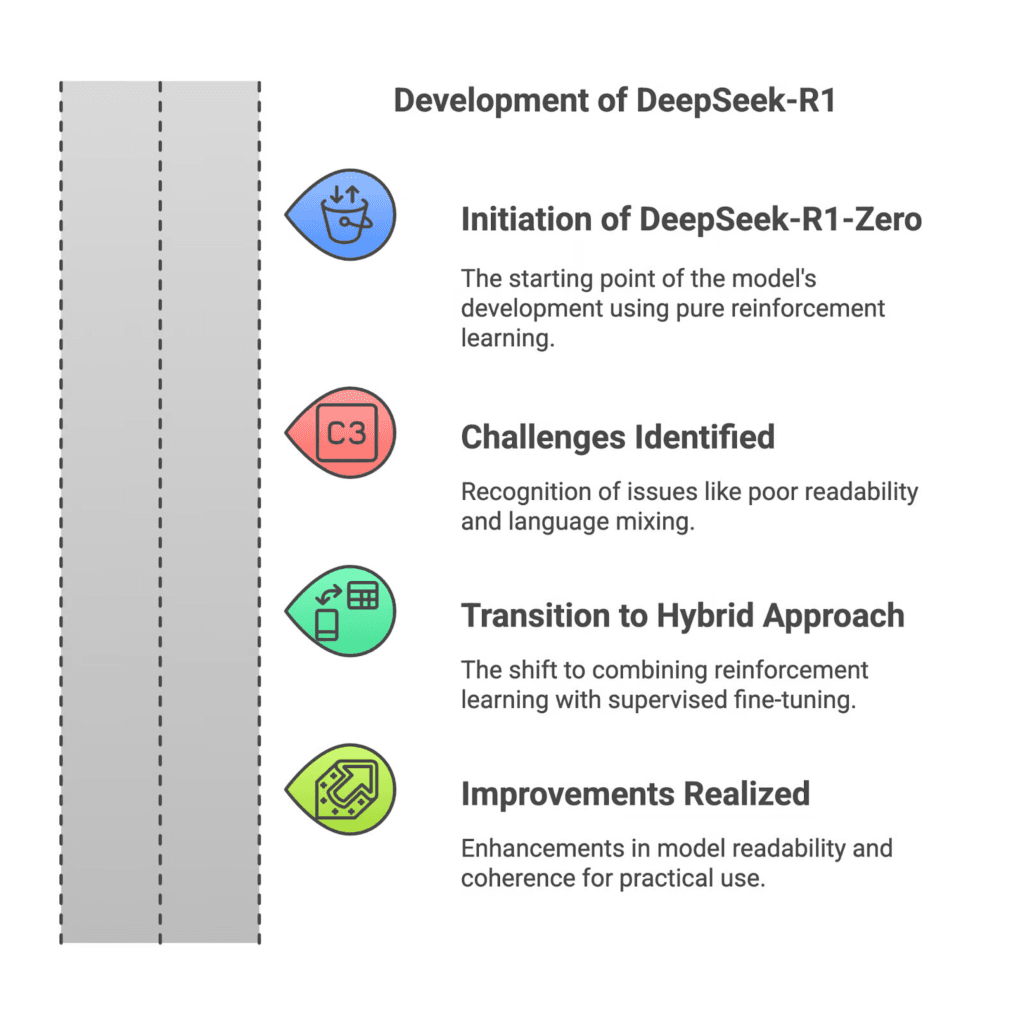
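To make per-token routing concrete, here is a minimal sketch of generic top-k expert gating in plain Python. It is not DeepSeek’s router: the DeepSeek-V3 report describes sigmoid-based affinity scores and a bias-based load-balancing scheme, with 256 routed experts plus one shared expert per MoE layer and 8 routed experts active per token. The toy experts, logits, and function names below are hypothetical.

```python
import math
from typing import Callable, List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over one token's router logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Select the top-k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_output(x: float, experts: List[Callable[[float], float]],
               router_logits: List[float], k: int) -> float:
    """Combine only the selected experts; unselected experts are never evaluated."""
    return sum(weight * experts[i](x) for i, weight in route_token(router_logits, k))


# Toy example: 4 hypothetical "experts" (real ones are feed-forward networks), 2 active per token.
experts = [lambda x: x, lambda x: 2 * x, lambda x: 3 * x, lambda x: 4 * x]
logits = [0.1, 2.0, 0.3, 2.0]
print(route_token(logits, k=2))               # experts 1 and 3, weight 0.5 each
print(moe_output(1.0, experts, logits, k=2))  # 0.5 * 2.0 + 0.5 * 4.0 = 3.0
```

Only the chosen experts’ outputs are computed. In a real model, skipping the unselected feed-forward experts is what keeps the active parameter count near 37 billion out of 671 billion.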

Reinforcement Learning (RL)-First Approach

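Unlike traditional LLMs that rely heavily on supervised fine-tuning (SFT), DeepSeek-R1 puts reinforcement learning (RL) at the center of its training. Its precursor, DeepSeek-R1-Zero, was trained with RL alone, without any SFT; DeepSeek-R1 adds a small “cold-start” SFT stage before large-scale RL to improve readability. This RL-first approach reduces dependency on labeled reasoning data. For reasoning tasks, the rewards are rule-based: an accuracy reward checks whether the final answer is correct (for example, a math result or code that passes tests), and a format reward checks that the reasoning appears inside the required tags. Trained this way, DeepSeek-R1 develops strong reasoning capabilities.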
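DeepSeek describes its reasoning rewards as rule-based rather than learned: an accuracy reward for a correct final answer and a format reward for putting the reasoning in the required tags. A minimal sketch, assuming a template with reasoning inside `<think>` tags and the answer inside `<answer>` tags (similar to the template DeepSeek describes for R1-Zero). The exact-match check and the 0.5 format weight are hypothetical choices, not DeepSeek’s published values.

```python
import re

# Assumed template: <think> reasoning </think> <answer> final answer </answer>
PATTERN = re.compile(r"^\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the think/answer template, else 0.0."""
    return 1.0 if PATTERN.match(completion) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the extracted answer matches the reference exactly (after trimming)."""
    match = PATTERN.match(completion)
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str, format_weight: float = 0.5) -> float:
    """Hypothetical combination: accuracy plus a smaller bonus for the correct format."""
    return accuracy_reward(completion, reference) + format_weight * format_reward(completion)


print(total_reward("<think>2 + 2 = 4</think> <answer>4</answer>", "4"))  # 1.5
print(total_reward("<think>guess</think><answer>5</answer>", "4"))       # 0.5
print(total_reward("The answer is 4", "4"))                              # 0.0
```

Because both checks are simple rules, the policy has little room to “game” a learned reward model, which is the motivation DeepSeek gives for avoiding neural reward models here.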

Group Relative Policy Optimization (GRPO)

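One of DeepSeek-R1’s most innovative features is Group Relative Policy Optimization (GRPO), the reinforcement learning algorithm DeepSeek introduced in its earlier DeepSeekMath work. For each prompt, GRPO samples a group of candidate outputs, scores each one, and measures every output against the group’s average reward, normalized by the group’s standard deviation. Because this group average serves as the baseline, GRPO does not need the separate critic (value) model that PPO uses, which reduces the memory and compute needed for large-scale RL. The payoff is cheaper training on tasks whose answers can be checked automatically, such as math and coding.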
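The core of GRPO is the group-relative advantage: each sampled output’s reward minus the group mean, divided by the group standard deviation. A minimal sketch of just that step, omitting the clipped policy-ratio objective and KL penalty that complete GRPO. It uses the population standard deviation; real implementations differ on that detail and usually add a small epsilon instead of special-casing zero.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float]) -> List[float]:
    """Advantage of each sampled output relative to its own group (one prompt, G samples)."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use sample std
    if std == 0:
        # All samples scored the same, so this prompt gives no relative learning signal.
        return [0.0] * len(rewards)
    return [(r - mean) / std for r in rewards]


# Four hypothetical completions for one prompt: two correct (reward 1), two wrong (reward 0).
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))  # [1.0, -1.0, -1.0, 1.0]
```

The two correct answers get advantage +1 and the two wrong ones -1, so the policy is pushed toward the better half of its own samples without any critic network.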

Distillation for Cost-Effective Deployment

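DeepSeek also released distilled models, smaller dense models that are cheaper to deploy. Distillation here is a training procedure rather than a feature of the MoE architecture: Qwen2.5 and Llama 3 models ranging from 1.5 billion to 70 billion parameters were fine-tuned on about 800,000 samples curated with DeepSeek-R1. These distilled models reportedly retain around 80% of the original model’s accuracy, with the larger variants coming closest, while significantly reducing deployment costs. This makes DeepSeek-R1 accessible to startups and small businesses with limited resources.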
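Distillation in this sense is ordinary supervised fine-tuning on teacher outputs, so most of the work is data curation. DeepSeek reports curating its reasoning samples with rejection sampling. The sketch below shows a simplified version of that step: keep only teacher completions whose final answer matches a reference. It assumes the answer follows a closing `</think>` tag; the `Sample` type, function names, and toy data are hypothetical, and DeepSeek’s real pipeline also filtered outputs for readability.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Sample:
    prompt: str
    completion: str  # teacher output: reasoning in <think> tags, then the final answer


def extract_final_answer(completion: str) -> str:
    """Return the text after the last </think> tag (assumed output format)."""
    return completion.split("</think>")[-1].strip()


def build_distillation_set(samples: List[Sample], references: Dict[str, str]) -> List[Dict[str, str]]:
    """Rejection sampling: keep only teacher completions whose final answer is correct."""
    kept = []
    for s in samples:
        reference = references.get(s.prompt)
        if reference is not None and extract_final_answer(s.completion) == reference.strip():
            kept.append({"prompt": s.prompt, "completion": s.completion})
    return kept


# Hypothetical teacher outputs: several samples per prompt, some wrong.
samples = [
    Sample("What is 1 + 1?", "<think>1 + 1 = 2</think> 2"),
    Sample("What is 1 + 1?", "<think>Maybe 3?</think> 3"),
    Sample("What is 2 * 3?", "<think>2 * 3 = 6</think> 6"),
]
references = {"What is 1 + 1?": "2", "What is 2 * 3?": "6"}
sft_data = build_distillation_set(samples, references)
print(len(sft_data))  # 2: the wrong "3" sample is discarded
```

The surviving records become the supervised training set for the smaller student model.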

3. Performance & Cost Efficiency

DeepSeek-R1’s performance benchmarks are nothing short of impressive. Let’s dive into the numbers and see how it stacks up against its competitors.

Benchmark Dominance

- Mathematical Reasoning: Achieves 97.3% pass@1 on the MATH-500 benchmark, slightly ahead of the 96.4% DeepSeek reports for OpenAI’s o1-1217.

- Coding Proficiency: Scores in the 96.3rd percentile on Codeforces, a competitive programming platform.

- Long-Context Understanding: Supports a 128,000-token context window, enabling it to process lengthy documents like legal contracts or research papers with ease.

Cost Efficiency

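- Training Costs: DeepSeek reported that the final training run of DeepSeek-V3, the base model behind R1, took 2.788 million H800 GPU hours, or about $5.6 million at an assumed rental price of $2 per GPU hour. The figure excludes earlier research and ablation experiments, so it is not the full cost of building R1. By comparison, GPT-4 reportedly cost more than $100 million to train.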

- API Pricing: At launch, DeepSeek-R1’s API charged $0.14 per million input tokens (with cache hits), far less than the $15 per million input tokens OpenAI charged for o1. Output tokens and cache misses are billed at higher rates on both services.
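The cost comparisons above are simple arithmetic, and a small helper makes it easy to rerun them with your own numbers. The GPU-hour count and $2 rental rate come from the DeepSeek-V3 technical report; the 10-million-token workload is a hypothetical example, and only input-token prices are modeled.

```python
def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Rental-equivalent compute cost of one training run."""
    return gpu_hours * usd_per_gpu_hour


def api_input_cost_usd(tokens: int, usd_per_million_tokens: float) -> float:
    """Cost of sending `tokens` input tokens at a per-million-token price."""
    return tokens / 1_000_000 * usd_per_million_tokens


# DeepSeek-V3 report: 2.788M H800 GPU hours at an assumed $2 per GPU hour.
v3_run = training_cost_usd(2_788_000, 2.0)

# Hypothetical workload: 10 million input tokens at the launch prices quoted above.
workload = 10_000_000
deepseek_r1 = api_input_cost_usd(workload, 0.14)  # cache-hit input price
openai_o1 = api_input_cost_usd(workload, 15.0)

print(f"V3 final run: ${v3_run:,.0f}")                     # V3 final run: $5,576,000
print(f"R1: ${deepseek_r1:.2f} vs o1: ${openai_o1:.2f}")  # R1: $1.40 vs o1: $150.00
```

For that workload the input-token bill is more than 100 times lower on DeepSeek; real bills also depend on output tokens and cache-miss rates, so plug in current prices before budgeting.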

Case Study: Medical Reasoning

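A recent case study explored DeepSeek-R1’s potential in medical reasoning by fine-tuning the model with LoRA (Low-Rank Adaptation) on domain-specific data. Note that the 79.8% pass rate on AIME 2024 often cited in this context is DeepSeek-R1’s own pass@1 score as reported by DeepSeek, not the result of LoRA fine-tuning; AIME 2024 is a math competition benchmark, so it measures mathematical rather than medical reasoning. Even so, such fine-tuning work highlights DeepSeek-R1’s versatility and potential for domain-specific applications.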

4. Use Cases & Applications

DeepSeek-R1’s versatility makes it suitable for a wide range of applications. Here are some of the most promising use cases.

Enterprise Solutions

- CogniFlow Assistants: DeepSeek-R1 powers CogniFlow, a suite of AI assistants designed for long-context analysis. These assistants are built to process lengthy legal contracts, medical reports, and financial documents.

- Hybrid Workflows: By combining DeepSeek-R1’s code generation capabilities with human review, enterprises can automate repetitive tasks while minimizing errors.

Creative & Technical Tasks

- Code Debugging: DeepSeek-R1’s coding proficiency makes it a valuable tool for developers. It can identify bugs and suggest fixes in real time, reducing development time and costs.

- Technical Writing: From API documentation to research papers, DeepSeek-R1 excels at generating clear and concise technical content.

- Interactive Storytelling: Game developers are leveraging DeepSeek-R1 to create dynamic NPCs (non-player characters) with branching narratives, enhancing player immersion.
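For code debugging, DeepSeek exposes R1 through an OpenAI-compatible API, so the official `openai` Python client works with a different `base_url`. A minimal sketch, assuming the `deepseek-reasoner` model name and `reasoning_content` response field from DeepSeek’s API documentation at the time of writing (model mappings change, so check the current docs) and a `DEEPSEEK_API_KEY` environment variable, which is our own naming choice. The instructions live in the user message, following the DeepSeek-R1 model card’s advice to avoid a system prompt.

```python
import os
from typing import Dict, List, Optional, Tuple


def build_debug_messages(code: str, error: str) -> List[Dict[str, str]]:
    """Build a single user message asking the model to explain and fix a bug."""
    prompt = (
        "The following Python code fails. Explain the bug, then give a corrected version.\n\n"
        f"Code:\n{code}\n\nError:\n{error}"
    )
    # All instructions go in the user turn; no system prompt is added.
    return [{"role": "user", "content": prompt}]


def ask_deepseek(messages: List[Dict[str, str]]) -> Tuple[Optional[str], Optional[str]]:
    """Send the request to DeepSeek's OpenAI-compatible endpoint; returns (reasoning, answer).

    Needs `pip install openai` and an API key in DEEPSEEK_API_KEY (assumed variable name).
    """
    from openai import OpenAI  # imported here so build_debug_messages works without the package

    client = OpenAI(api_key=os.environ["DEEPSEEK_API_KEY"], base_url="https://api.deepseek.com")
    response = client.chat.completions.create(model="deepseek-reasoner", messages=messages)
    message = response.choices[0].message
    # DeepSeek returns the chain-of-thought in a separate `reasoning_content` field.
    return getattr(message, "reasoning_content", None), message.content
```

Usage: `reasoning, fix = ask_deepseek(build_debug_messages("print(1 / 0)", "ZeroDivisionError: division by zero"))`. Keeping the reasoning separate from the answer makes it easy to show developers only the proposed fix while logging the reasoning for review.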

5. Fine-Tuning Guide

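Fine-tuning DeepSeek-R1 for specific tasks is straightforward, thanks to its open weights and permissive license. In practice, most guides fine-tune one of the distilled models, because the full 671-billion-parameter MoE model requires multi-GPU infrastructure even for parameter-efficient methods. Here’s a step-by-step guide to getting started.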

Step 1: Dataset Preparation

Curate a structured dataset with prompts, chain-of-thought reasoning, and desired outputs. For example, if fine-tuning for medical diagnosis, include questions, diagnostic steps, and final diagnoses.
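A minimal sketch of turning one curated example into a training record, assuming a prompt/completion layout with the reasoning wrapped in `<think>` tags, the convention DeepSeek-R1 uses for its own reasoning. The field names and JSON Lines output are assumptions; match whatever format your training tool expects.

```python
import json
from typing import Dict, List


def make_record(question: str, reasoning: str, answer: str) -> Dict[str, str]:
    """One example: the prompt, then the chain-of-thought in <think> tags, then the answer."""
    completion = f"<think>\n{reasoning.strip()}\n</think>\n\n{answer.strip()}"
    return {"prompt": question.strip(), "completion": completion}


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


example = make_record(
    "A patient has fever, neck stiffness, and sensitivity to light. What is the most likely diagnosis?",
    "Fever with neck stiffness and photophobia points to inflammation of the meninges.",
    "Meningitis",
)
print(to_jsonl([example]))
```

Keeping the diagnostic steps inside the tags teaches the model to reason before answering, in the same shape DeepSeek-R1 already produces.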

Step 2: LoRA Configuration

Use LoRA (Low-Rank Adaptation) to train small low-rank adapter matrices on specific transformer layers, such as the attention query projection and the feed-forward layers, while the original weights stay frozen. This targeted approach ensures efficiency and reduces computational costs.
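The idea behind LoRA fits in one line: keep the pretrained weight W0 frozen and learn a low-rank update, so the layer computes h = W0·x + (alpha/r)·B·A·x, with B initialized to zero so training starts from the unchanged model. A dependency-free sketch of that forward pass and of the parameter savings; the toy matrices are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w0: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float, r: int) -> Vector:
    """h = W0 x + (alpha / r) * B (A x).

    w0: frozen d x k pretrained weight; a: trainable r x k; b: trainable d x r.
    """
    base = matvec(w0, x)
    update = matvec(b, matvec(a, x))
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Parameters LoRA trains for one d x k weight: B (d x r) plus A (r x k)."""
    return r * (d + k)


w0 = [[1.0, 2.0], [3.0, 4.0]]
a = [[0.5, 0.5]]         # rank r = 1
b_init = [[0.0], [0.0]]  # B starts at zero, so the adapter is a no-op at step 0
print(lora_forward(w0, a, b_init, [1.0, 1.0], alpha=2.0, r=1))  # [3.0, 7.0], same as W0 x

# A 4096 x 4096 projection with rank 16: 131,072 trainable vs 16,777,216 frozen (about 0.78%).
print(lora_trainable_params(4096, 4096, 16))  # 131072
```

In practice you would not write this by hand: Hugging Face PEFT’s `LoraConfig` takes `r`, `lora_alpha`, and `target_modules`. In the Llama- and Qwen-based distilled models the query projection is typically named `q_proj` and the feed-forward projections `gate_proj`, `up_proj`, and `down_proj`; check your model’s module names before configuring.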

Step 3: Training Tools

Leverage tools like the Hugging Face libraries (Transformers, PEFT, and TRL), PyTorch, and Weights & Biases to streamline the training process. These tools provide pre-built training pipelines and dashboards for tracking progress.

Step 4: Evaluation

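Test the fine-tuned model on a held-out set of domain-specific examples that were not used in training. For medical reasoning, that means held-out medical questions; math benchmarks such as AIME 2024 measure mathematical reasoning and are useful mainly to confirm that fine-tuning has not degraded the model’s general reasoning ability.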
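A minimal exact-match evaluator for a held-out set, assuming the model’s final answer follows its `</think>` block. The `generate` argument stands in for whatever inference call you use (hypothetical here), and exact match is deliberately crude: free-text medical answers usually need normalization, rubrics, or expert grading.

```python
from typing import Callable, List, Tuple


def final_answer(output: str) -> str:
    """Drop the <think>...</think> reasoning and normalize the final answer."""
    return output.split("</think>")[-1].strip().lower()


def exact_match_accuracy(held_out: List[Tuple[str, str]], generate: Callable[[str], str]) -> float:
    """Share of (prompt, reference) pairs whose final answer matches the reference."""
    if not held_out:
        return 0.0
    correct = sum(final_answer(generate(prompt)) == ref.strip().lower() for prompt, ref in held_out)
    return correct / len(held_out)


# Hypothetical held-out pairs and canned model outputs standing in for real inference.
held_out = [("case 1", "Meningitis"), ("case 2", "Appendicitis")]
canned = {
    "case 1": "<think>Fever, stiff neck, photophobia...</think> Meningitis",
    "case 2": "<think>Epigastric pain...</think> Gastritis",
}
print(exact_match_accuracy(held_out, lambda p: canned[p]))  # 0.5
```

Running the same evaluator on the base model and the fine-tuned model gives a simple before-and-after comparison on identical questions.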

6. Ethical & Market Implications

While DeepSeek-R1 offers immense potential, it also raises important ethical and market considerations.

Open-Source Democratization

DeepSeek-R1’s open-source nature has democratized access to cutting-edge AI technology. With over 10 million downloads on Hugging Face, it has empowered startups and researchers worldwide.

Ethical Risks

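- Bias Mitigation: DeepSeek-R1’s training includes a language consistency reward, but it targets language mixing (for example, switching between Chinese and English inside the chain-of-thought), not social bias. Fairness still requires separate evaluation and ongoing monitoring.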

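- Censorship Concerns: As a Chinese-origin model, DeepSeek-R1 has faced scrutiny over censorship of politically sensitive topics and over data privacy when using DeepSeek’s hosted app and API. Running the open weights on your own infrastructure keeps prompts and data off DeepSeek’s servers.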
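DeepSeek describes the language consistency reward as the proportion of target-language words in the chain-of-thought, and notes that it slightly lowers benchmark performance in exchange for more readable output. A rough sketch with English as the target language; the ASCII-letters test is a hypothetical heuristic, and it treats an unspaced run of Chinese characters as one word, where a real implementation would need proper word segmentation.

```python
def is_target_language_word(word: str) -> bool:
    """Hypothetical heuristic for English: every letter in the word is ASCII."""
    letters = [ch for ch in word if ch.isalpha()]
    return bool(letters) and all(ch.isascii() for ch in letters)


def language_consistency_reward(chain_of_thought: str) -> float:
    """Proportion of words in the reasoning that are in the target language."""
    words = [w for w in chain_of_thought.split() if any(ch.isalpha() for ch in w)]
    if not words:
        return 0.0
    return sum(is_target_language_word(w) for w in words) / len(words)


print(language_consistency_reward("First, compute the total cost."))  # 1.0
print(language_consistency_reward("First 我们 compute the 总和"))      # 0.6
```

A reward like this only measures which language the reasoning is written in; it says nothing about whether the content is fair or unbiased.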

Market Impact

DeepSeek-R1 has accelerated innovation cycles, with tech giants like Tencent replicating its architecture within 1–2 months. This rapid adoption underscores its transformative potential.

7. Future Outlook

The future of DeepSeek-R1 and open-source LLMs is bright, but not without challenges.

AGI Speculation

During DeepSeek-R1’s training, reasoning behaviors such as self-verification and reflection emerged from reinforcement learning alone, which has fueled speculation about self-improving AI systems and, eventually, artificial general intelligence (AGI).

Regulatory Challenges

With the rapid advancement of AI, regulatory crackdowns are likely. Experts predict a 70% probability of stricter regulations by 2027.

Distillation Trends

The trend toward smaller, more efficient models will continue, with distilled versions of DeepSeek-R1 retaining roughly 80% of the full model’s accuracy at one-third the cost.

8. Key Takeaways

- For Developers: Leverage DeepSeek-R1’s API or distilled models for cost-efficient scaling.

- For Businesses: Adopt hybrid human-AI workflows to balance automation and oversight.

- For Researchers: Explore RL-first training and GRPO for reasoning-focused models.

9. Resources & Next Steps

Ready to dive into DeepSeek-R1? Here are some resources to get started:

- Tools: Ollama for local deployment, Hugging Face for distilled model weights.

- Tutorials: Fine-tuning guides from DataCamp and Geeky Gadgets.

- Community: Follow Hugging Face’s Open-R1 project, an open effort to reproduce DeepSeek-R1’s training pipeline.
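For local deployment, Ollama serves models over a local HTTP API, by default on port 11434. A minimal standard-library sketch, assuming the model was pulled first with `ollama pull deepseek-r1:7b` and that the reasoning arrives inline in `<think>` tags. Depending on your Ollama version and settings, the reasoning may instead come back in a separate field, in which case `split_thinking` returns the whole text as the answer.

```python
import json
import urllib.request
from typing import Tuple

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_payload(prompt: str, model: str = "deepseek-r1:7b") -> bytes:
    """JSON body for one non-streaming generation request."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def split_thinking(text: str) -> Tuple[str, str]:
    """Split inline <think>...</think> reasoning from the final answer, if present."""
    before, opened, rest = text.partition("<think>")
    thinking, closed, after = rest.partition("</think>")
    if opened and closed:
        return thinking.strip(), (before + after).strip()
    return "", text.strip()


def generate(prompt: str, model: str = "deepseek-r1:7b") -> Tuple[str, str]:
    """Query a running Ollama server and return (reasoning, answer)."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return split_thinking(body["response"])
```

Once the Ollama server is running, `reasoning, answer = generate("Summarize the MIT License in one sentence.")` returns both parts. Swapping the `model` tag (for example a larger distilled variant) is the only change needed to trade speed for accuracy.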

DeepSeek-R1 is more than just an LLM; it’s a catalyst for innovation. By democratizing access to cutting-edge AI, it empowers individuals and organizations to push the boundaries of what’s possible. Whether you’re a developer, business leader, or researcher, now is the time to embrace the open-source revolution and unlock the full potential of DeepSeek-R1.